K8S cluster on AWS EC2

Usage

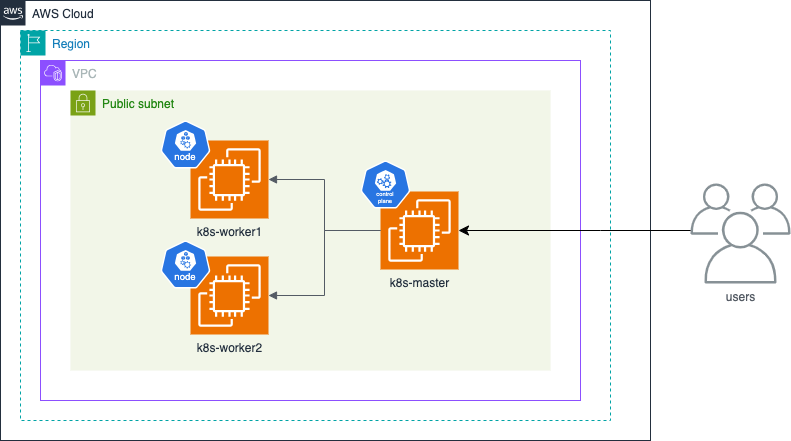

Deployment architecture

Directory structure

Terraform-Bulding-K8S

├── main.tf

├── outputs.tf

├── variables.tf

└── scripts

    ├── install_k8s_msr.sh

    └── install_k8s_wrk.sh

Configuration file contents

main.tf

provider "aws" {

region = var.region

}

#****** VPC Start ******#

resource "aws_vpc" "some_custom_vpc" {

cidr_block = "10.0.0.0/16"

tags = {

Name = "K8S VPC"

}

}

resource "random_shuffle" "az" {

input = ["${var.region}a", "${var.region}c", "${var.region}d"]

result_count = 1

}

resource "aws_subnet" "some_public_subnet" {

vpc_id = aws_vpc.some_custom_vpc.id

cidr_block = "10.0.1.0/24"

availability_zone = random_shuffle.az.result[0]

tags = {

Name = "K8S Subnet"

}

}

resource "aws_internet_gateway" "some_ig" {

vpc_id = aws_vpc.some_custom_vpc.id

tags = {

Name = "K8S Internet Gateway"

}

}

resource "aws_route_table" "public_rt" {

vpc_id = aws_vpc.some_custom_vpc.id

route {

cidr_block = "0.0.0.0/0"

gateway_id = aws_internet_gateway.some_ig.id

}

route {

ipv6_cidr_block = "::/0"

gateway_id = aws_internet_gateway.some_ig.id

}

tags = {

Name = "Public Route Table"

}

}

resource "aws_route_table_association" "public_1_rt_a" {

subnet_id = aws_subnet.some_public_subnet.id

route_table_id = aws_route_table.public_rt.id

}

resource "aws_security_group" "k8s_sg" {

name = "K8S Ports"

vpc_id = aws_vpc.some_custom_vpc.id

ingress {

from_port = 80

to_port = 80

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

ingress {

from_port = 6443

to_port = 6443

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

ingress {

from_port = 2379

to_port = 2380

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

ingress {

from_port = 22

to_port = 22

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

ingress {

from_port = 10250

to_port = 10250

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

ingress {

from_port = 30000

to_port = 32767

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

}

#****** VPC END ******#

resource "random_string" "s3name" {

length = 9

special = false

upper = false

lower = true

}

resource "aws_s3_bucket_acl" "s3_bucket_acl" {

bucket = aws_s3_bucket.s3buckit.id

acl = "private"

depends_on = [aws_s3_bucket_ownership_controls.s3_bucket_acl_ownership]

}

resource "aws_s3_bucket_ownership_controls" "s3_bucket_acl_ownership" {

bucket = aws_s3_bucket.s3buckit.id

rule {

object_ownership = "ObjectWriter"

}

}

resource "aws_s3_bucket" "s3buckit" {

bucket = "k8s-${random_string.s3name.result}"

force_destroy = true

depends_on = [

random_string.s3name

]

}

resource "aws_iam_role" "k8s_s3_role" {

name = "k8s_s3_role"

assume_role_policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": "sts:AssumeRole",

"Principal": {

"Service": "ec2.amazonaws.com"

},

"Effect": "Allow",

"Sid": ""

}

]

}

EOF

tags = {

tag-key = "tag-value"

}

}

resource "aws_iam_instance_profile" "k8s_s3_profile" {

name = "k8s_s3_profile"

role = aws_iam_role.k8s_s3_role.name

}

resource "aws_iam_role_policy" "k8s_s3_policy" {

name = "k8s_s3_policy"

role = aws_iam_role.k8s_s3_role.id

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": [

"s3:*"

],

"Effect": "Allow",

"Resource": "*"

}

]

}

EOF

}

resource "aws_instance" "ec2_instance_msr" {

ami = var.ami_id

subnet_id = aws_subnet.some_public_subnet.id

instance_type = var.instance_type

key_name = var.ami_key_pair_name

associate_public_ip_address = true

iam_instance_profile = aws_iam_instance_profile.k8s_s3_profile.name

# Instances in a custom VPC must reference security groups by ID through vpc_security_group_ids.
vpc_security_group_ids = [aws_security_group.k8s_sg.id]

root_block_device {

volume_type = "gp2"

volume_size = "8"

delete_on_termination = true

}

tags = {

Name = "k8s_msr_1"

}

user_data_base64 = base64encode(templatefile("${path.module}/scripts/install_k8s_msr.sh", {

region = var.region

s3buckit_name = "k8s-${random_string.s3name.result}"

}))

depends_on = [

aws_s3_bucket.s3buckit,

random_string.s3name

]

}

resource "aws_instance" "ec2_instance_wrk" {

ami = var.ami_id

count = var.number_of_worker

subnet_id = aws_subnet.some_public_subnet.id

instance_type = var.instance_type

key_name = var.ami_key_pair_name

associate_public_ip_address = true

iam_instance_profile = aws_iam_instance_profile.k8s_s3_profile.name

# Instances in a custom VPC must reference security groups by ID through vpc_security_group_ids.
vpc_security_group_ids = [aws_security_group.k8s_sg.id]

root_block_device {

volume_type = "gp2"

volume_size = "8"

delete_on_termination = true

}

tags = {

Name = "k8s_wrk_${count.index + 1}"

}

user_data_base64 = base64encode(templatefile("${path.module}/scripts/install_k8s_wrk.sh", {

region = var.region

s3buckit_name = "k8s-${random_string.s3name.result}"

worker_number = count.index + 1

}))

depends_on = [

aws_s3_bucket.s3buckit,

random_string.s3name,

aws_instance.ec2_instance_msr

]

}

variables.tf

variable "access_key" { #TODO: set your AWS access key. Note that main.tf does not reference this variable; the provider block only sets the region.

description = "Access key to AWS console"

default = ""

}

variable "secret_key" { #TODO: set your AWS secret key. Note that main.tf does not reference this variable; the provider block only sets the region.

description = "Secret key to AWS console"

default = ""

}

variable "ami_key_pair_name" { #TODO: set the default to your EC2 key pair name. Hint: use the key name only, without the '.pem' extension.

default = "aws-ec2-apn1-k8s"

}

variable "number_of_worker" {

description = "Number of worker instances to join to the cluster."

default = 2

}

variable "region" {

description = "The AWS region to deploy into"

default = "ap-northeast-1" #Tokyo. If you change the region, make sure ami_id below exists there and that the availability zones listed in main.tf do too.

}

variable "ami_id" {

description = "The AMI to use"

default = "ami-07c589821f2b353aa" #Ubuntu 20.04

}

variable "instance_type" {

default = "t2.medium" #2 vCPUs and 4 GiB RAM; kubeadm needs at least 2 CPUs and 2 GB RAM on the control-plane node.

}
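The defaults above can be overridden without editing variables.tf. A minimal sketch, assuming you run Terraform from the same shell: Terraform reads any environment variable named TF_VAR_<name> as the value of variable <name>. The key pair name below is a placeholder, not a value from this repository.

```shell
#!/bin/bash
# Override two defaults from variables.tf for this shell session only.
export TF_VAR_number_of_worker=3
export TF_VAR_ami_key_pair_name="my-key-pair"   # hypothetical key pair name

# Show what Terraform would pick up from the environment.
env | grep '^TF_VAR_' | sort

# terraform apply   # left commented out so the snippet has no side effects
```

The same values can be passed on the command line with `-var`, for example `terraform apply -var="number_of_worker=3"`.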

outputs.tf

output "instance_msr_public_ip" {

description = "Public IP address of the master node"

value = aws_instance.ec2_instance_msr.public_ip

}

output "instance_wrks_public_ip" {

description = "Public IP addresses of the worker nodes"

value = aws_instance.ec2_instance_wrk[*].public_ip

}

# output "instance_msr_private_ip" {

# description = "Private IP address of master"

# value = aws_instance.ec2_instance_msr.private_ip

# }

# output "s3_bucket_name" {

# description = "The S3 bucket name"

# value = "k8s-${random_string.s3name.result}"

# }

install_k8s_msr.sh

#!/bin/bash

hostname k8s-msr-1

echo "k8s-msr-1" > /etc/hostname

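# AWS credentials come from the instance profile attached in main.tf, so no access keys are exported here.
# (main.tf does not pass access_key or private_key to templatefile, so referencing them would fail at plan time.)

export AWS_DEFAULT_REGION=${region}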

echo "[TASK 1] Disable and turn off SWAP"

sudo sed -i '/swap/d' /etc/fstab

sudo swapoff -a

echo "[TASK 2] Stop and Disable firewall"

sudo systemctl disable --now ufw >/dev/null 2>&1

echo "[TASK 3] Install AWS CLI"

apt update

apt install awscli -y

echo "[TASK 4] Enable and Load Kernel modules"

cat >>/etc/modules-load.d/containerd.conf<<EOF

overlay

br_netfilter

EOF

modprobe overlay

modprobe br_netfilter

echo "[TASK 5] Add Kernel settings"

cat >>/etc/sysctl.d/kubernetes.conf<<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system >/dev/null 2>&1

echo "[TASK 6] Install containerd runtime"

mkdir -p /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

apt -qq update >/dev/null 2>&1

apt install -qq -y containerd.io >/dev/null 2>&1

containerd config default >/etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml

sudo systemctl restart containerd

sudo systemctl enable containerd >/dev/null 2>&1

echo "[TASK 7] Add apt repo for kubernetes"

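# The legacy apt.kubernetes.io repository has been shut down; pkgs.k8s.io is the community-owned replacement.

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /" > /etc/apt/sources.list.d/kubernetes.list

apt -qq update >/dev/null 2>&1

echo "[TASK 8] Install Kubernetes components (kubeadm, kubelet and kubectl)"

apt install -qq -y kubeadm='1.28.0-*' kubelet='1.28.0-*' kubectl='1.28.0-*' >/dev/null 2>&1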

echo "[TASK 9] Kubernetes cluster init"

export ipaddr=`ip address|grep eth0|grep inet|awk -F ' ' '{print $2}' |awk -F '/' '{print $1}'`

export pubip=`dig +short myip.opendns.com @resolver1.opendns.com`

kubeadm init --apiserver-advertise-address=$ipaddr --pod-network-cidr=172.16.0.0/16 --apiserver-cert-extra-sans=$pubip > /tmp/result.out

cat /tmp/result.out

echo "[TASK 10] Get the join command"

tail -2 /tmp/result.out > /tmp/join_command.sh;

aws s3 cp /tmp/join_command.sh s3://${s3buckit_name};

#this adds .kube/config for root account, run same for ubuntu user, if you need it

sudo mkdir -p /root/.kube;

sudo cp -i /etc/kubernetes/admin.conf /root/.kube/config;

sudo cp -i /etc/kubernetes/admin.conf /tmp/admin.conf;

sudo chmod 755 /tmp/admin.conf

echo "[TASK 11] Add kube config to ubuntu user"

# user data runs as root, so use the ubuntu user's home explicitly instead of $HOME

mkdir -p /home/ubuntu/.kube

cp /etc/kubernetes/admin.conf /home/ubuntu/.kube/config

chown -R ubuntu:ubuntu /home/ubuntu/.kube

#to copy kube config file to s3

# aws s3 cp /etc/kubernetes/admin.conf s3://${s3buckit_name}

echo "[TASK 12] Install Pod Network"

sudo curl -o /root/kube-flannel.yml https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

sleep 5

sudo sed -i "s/10.244.0.0/172.16.0.0/g" /root/kube-flannel.yml

sudo sed -i "/mgr/a\ - --iface=eth0" /root/kube-flannel.yml

sudo kubectl --kubeconfig /root/.kube/config apply -f /root/kube-flannel.yml

sudo systemctl restart kubelet

echo "[TASK 13] Apply kubectl Cheat Sheet Autocomplete"

source <(kubectl completion bash) # set up autocomplete in bash into the current shell, bash-completion package should be installed first.

echo "source <(kubectl completion bash)" >> /home/ubuntu/.bashrc # add autocomplete permanently to your bash shell.

echo "source <(kubectl completion bash)" >> /root/.bashrc # add autocomplete permanently to your bash shell.

alias k=kubectl

complete -o default -F __start_kubectl k

echo "alias k=kubectl" >> /home/ubuntu/.bashrc

echo "alias k=kubectl" >> /root/.bashrc

echo "complete -o default -F __start_kubectl k" >> /home/ubuntu/.bashrc

echo "complete -o default -F __start_kubectl k" >> /root/.bashrc
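Once the master script has finished and the workers have joined, it can help to check how many nodes report Ready. The sketch below is not part of the repository: `count_ready_nodes` is a hypothetical helper that reads `kubectl get nodes --no-headers` output on stdin, and the sample rows are illustrative stand-ins for real output.

```shell
#!/bin/bash
# Count nodes whose STATUS column (second field) is exactly "Ready".
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Real usage on the master node:
#   kubectl get nodes --no-headers | count_ready_nodes

# Illustrative input using the hostnames set by the install scripts.
sample='k8s-msr-1   Ready      control-plane   5m   v1.28.0
k8s-wrk-1   Ready      <none>          3m   v1.28.0
k8s-wrk-2   NotReady   <none>          1m   v1.28.0'

printf '%s\n' "$sample" | count_ready_nodes
```

With the default number_of_worker of 2, a fully joined cluster should report 3 Ready nodes.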

install_k8s_wrk.sh

#!/bin/bash

######### ** FOR WORKER NODE ** #########

hostname k8s-wrk-${worker_number}

echo "k8s-wrk-${worker_number}" > /etc/hostname

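# AWS credentials come from the instance profile attached in main.tf, so no access keys are exported here.
# (main.tf does not pass access_key or private_key to templatefile, so referencing them would fail at plan time.)

export AWS_DEFAULT_REGION=${region}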

echo "[TASK 1] Disable and turn off SWAP"

sudo sed -i '/swap/d' /etc/fstab

sudo swapoff -a

echo "[TASK 2] Stop and Disable firewall"

sudo systemctl disable --now ufw >/dev/null 2>&1

echo "[TASK 3] Install AWS CLI"

apt update

apt install awscli -y

echo "[TASK 4] Enable and Load Kernel modules"

cat >>/etc/modules-load.d/containerd.conf<<EOF

overlay

br_netfilter

EOF

modprobe overlay

modprobe br_netfilter

echo "[TASK 5] Add Kernel settings"

cat >>/etc/sysctl.d/kubernetes.conf<<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system >/dev/null 2>&1

echo "[TASK 6] Install containerd runtime"

mkdir -p /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

apt -qq update >/dev/null 2>&1

apt install -qq -y containerd.io >/dev/null 2>&1

containerd config default >/etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml

sudo systemctl restart containerd

sudo systemctl enable containerd >/dev/null 2>&1

echo "[TASK 7] Add apt repo for kubernetes"

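# The legacy apt.kubernetes.io repository has been shut down; pkgs.k8s.io is the community-owned replacement.

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /" > /etc/apt/sources.list.d/kubernetes.list

apt -qq update >/dev/null 2>&1

echo "[TASK 8] Install Kubernetes components (kubeadm, kubelet and kubectl)"

apt install -qq -y kubeadm='1.28.0-*' kubelet='1.28.0-*' kubectl='1.28.0-*' >/dev/null 2>&1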

echo "[TASK 9] Join master node"

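# Poll S3 until the master has uploaded the join command, rather than relying on a fixed one-minute sleep.

until aws s3 cp s3://${s3buckit_name}/join_command.sh /tmp/join_command.sh; do sleep 15; done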

chmod +x /tmp/join_command.sh

bash /tmp/join_command.sh

Terraform commands

- terraform init

- terraform validate

- terraform apply

- terraform show

- terraform destroy
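The commands above are normally run in that order from the Terraform-Bulding-K8S directory. As a rough sketch, the deployment half can be wrapped in a small script. `run` and `deploy_cluster` are hypothetical helpers, not part of the repository, and the last step reads the `instance_msr_public_ip` output instead of calling `terraform show`. Setting DRY_RUN=1 only prints the commands, so the sequence can be previewed without touching AWS.

```shell
#!/bin/bash
# run: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# deploy_cluster: initialise, validate, apply, then print the master's public IP.
# Each step runs only if the previous one succeeded.
deploy_cluster() {
  run terraform init &&
  run terraform validate &&
  run terraform apply &&
  run terraform output instance_msr_public_ip
}

# Preview the sequence without creating any resources.
DRY_RUN=1 deploy_cluster
```

When the cluster is no longer needed, `terraform destroy` removes everything the configuration created, including the S3 bucket (force_destroy is enabled in main.tf).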